From the literature review in Shute (2018), Focus on Feedback, we learned suggested practices for giving feedback on practice problems to enhance learning.

The H5P toolset provides a variety of ways to give feedback on correct and incorrect responses, as well as overall feedback for problems with multiple parts. The default response is simply “correct” (a green check mark) or “incorrect” (a red X).

In my first pass through a wide variety of published H5P content, however, I found that the majority of it did not take advantage of response-specific and performance-level feedback. To be honest, I thought that maybe the tool did not provide it. But after spending a lot more time creating examples, I think it is more likely that the features are either (a) not apparent enough or (b) not understood.

This was not a vast research study! And I do not mean to criticize authors, as I have no context for the work they were doing.

I reviewed individual H5P content created at the eCampusOntario H5P Studio (it’s worth exploring for examples in your discipline or for content types). The filters allow some narrowing of these searches. I focused on content types that definitely provide response-level feedback: Multiple Choice, True/False, and Question Set.

I reviewed a total of 22 items and found only 6 (27%) that had some kind of feedback; all the others simply reported “correct/incorrect”. Even among these 6, several were minimal: they provided feedback only for a correct response, or offered the kind of praise-oriented feedback that Shute advises against, e.g. “great work!”, or for distractors simply “wrong, try again.” One Question Set had great feedback, but only for the first item.

One item, for practicing the differences between 6th and 7th edition APA formatting, had some responsive feedback.

The next approach I took to investigating feedback was to look at published Pressbooks that contained 30 or more pieces of H5P content.

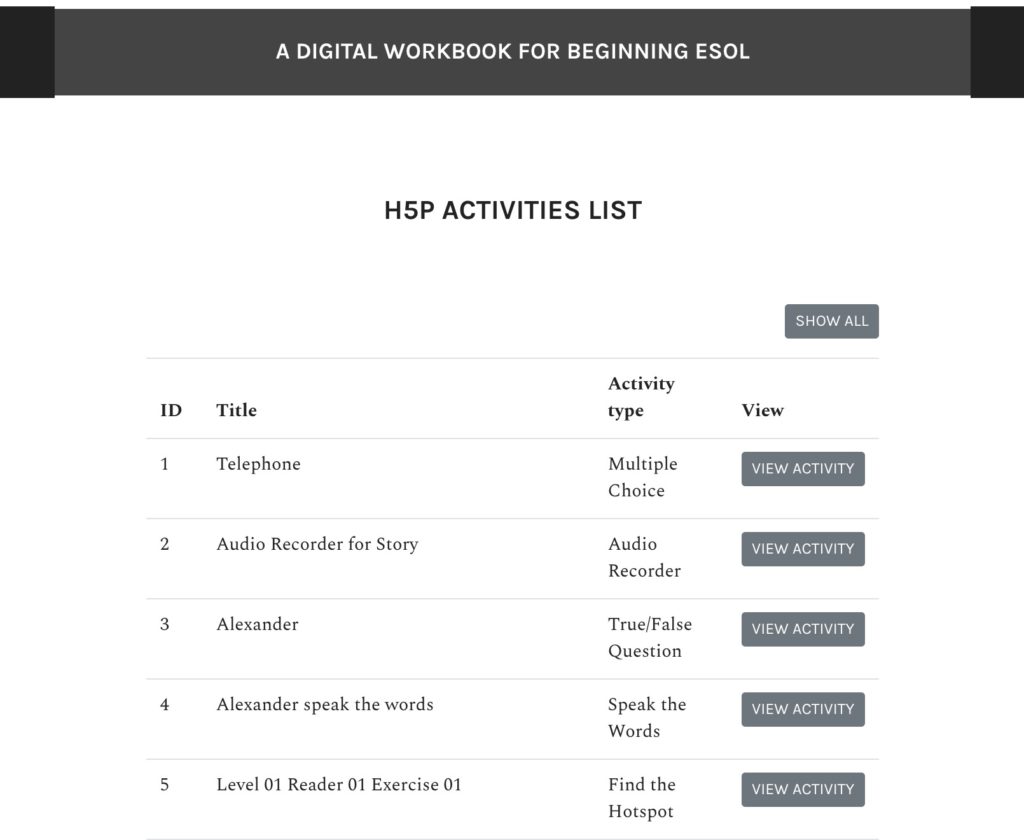

Here are my nifty tricks for finding H5P content. For any published Pressbook, like the Digital Workbook for ESOL at https://openoregon.pressbooks.pub/esol23/, add h5p-listing to the end of the URL and check out what you get.

You can see what types are used, view them, and even download them (if enabled). This is a valuable way to explore all the H5P content in a published Pressbook.

What the listing does not show is the H5P in the context of the text, so the next URL trick is to append ?s=h5p to the textbook URL, which performs a site search for H5P. For the same ESOL Workbook, we can then see where the activities sit in the textbook (though it takes one more click to explore each one).
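If you check many books this way, the two URL tricks are easy to script. This is a minimal sketch that assumes only what is described above: the listing is the book's base URL plus h5p-listing, and the search is the base URL plus ?s=h5p. The function names are hypothetical, and the code only builds the URLs; it does not fetch or parse the pages.

```python
from urllib.parse import urlencode


def _normalize(base_url: str) -> str:
    """Make sure the book URL ends with exactly one slash."""
    return base_url.rstrip("/") + "/"


def h5p_listing_url(base_url: str) -> str:
    """Page listing all H5P content in a Pressbook (path assumed from the trick above)."""
    return _normalize(base_url) + "h5p-listing"


def h5p_search_url(base_url: str) -> str:
    """Site search for 'h5p', which shows where activities sit in the text."""
    return _normalize(base_url) + "?" + urlencode({"s": "h5p"})


if __name__ == "__main__":
    book = "https://openoregon.pressbooks.pub/esol23/"
    print(h5p_listing_url(book))
    print(h5p_search_url(book))
```

Normalizing the trailing slash means the helpers work whether or not the copied book URL ends in "/".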

I reviewed only 10 different Pressbooks, and only 1 of them provided extensive feedback; most, again, published problems whose only feedback was green and red marks. I looked only at books that used more than a few of the content types that provide feedback.

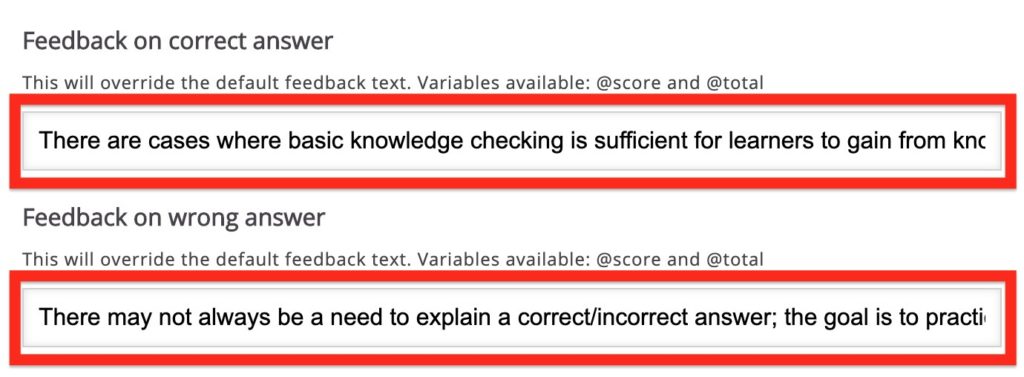

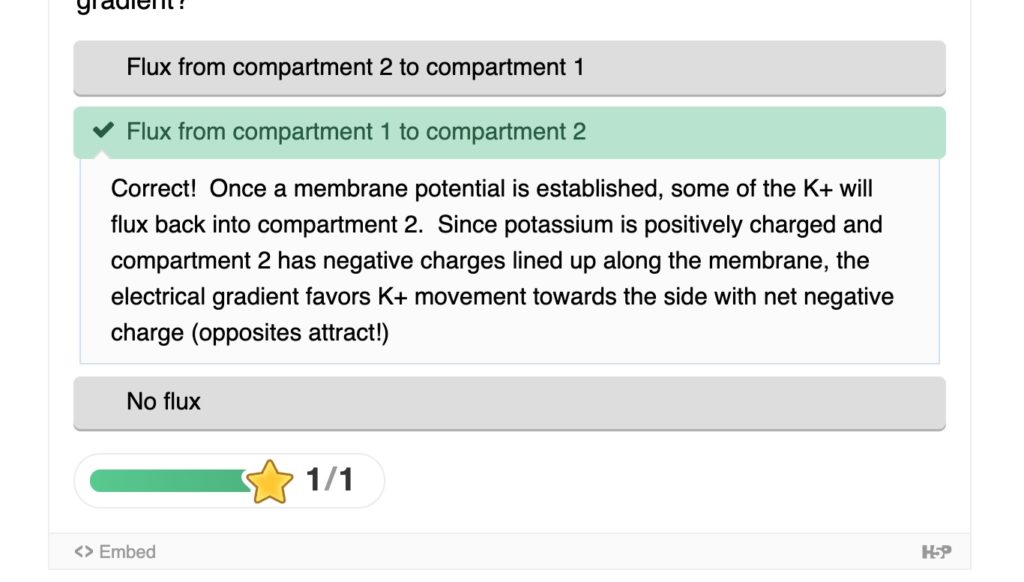

The book where I saw some of the best feedback was Business Writing For Everyone from Kwantlen Polytechnic University (go KPU!). See its listing of exercises for examples, like this true/false item:

You can review my findings and criticize my conclusions! But I see a lot of underuse of the feedback capability in H5P. So here I have created a few examples of content types, first without feedback and then with it.

Feedback in True/False Content Type

H5P provides a way to give feedback for both responses. Should it be used?

Here is a first version without feedback.

Does this make a difference?

Despite what I wrote above, in some basic practice situations the repetition of knowing you are correct might be enough reinforcement. It depends more on how the problems are set up. Is a learner motivated to learn why they were right or wrong, or are they just looking to “win” by picking the correct answer?

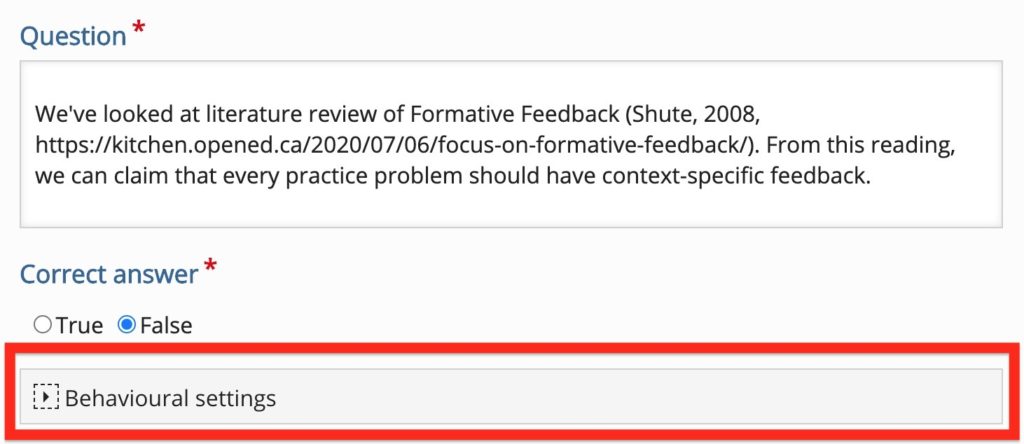

For the true/false question type in H5P, it’s obvious in the editor where to indicate the correct answer, but the feedback fields are lurking inside the tab labeled “Behavioural Settings”.

Open this drawer to find the fields for response-specific feedback: the first field is the feedback for the correct answer (in this case “false”) and the second is for the incorrect answer (“true”).
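To make the difference between default and response-specific feedback concrete, here is a hypothetical model of a true/false item. The class and field names are illustrative assumptions, not H5P's internal format; an empty feedback field falls back to a bare “Correct” or “Incorrect”, mimicking what learners see when the Behavioural Settings fields are left blank.

```python
from dataclasses import dataclass


@dataclass
class TrueFalseItem:
    statement: str
    correct_answer: bool
    feedback_on_correct: str = ""  # hypothetical stand-in for the first feedback field
    feedback_on_wrong: str = ""    # hypothetical stand-in for the second feedback field

    def respond(self, choice: bool) -> str:
        """Return feedback for the learner's choice, or a bare verdict if none was written."""
        if choice == self.correct_answer:
            return self.feedback_on_correct or "Correct"
        return self.feedback_on_wrong or "Incorrect"


statement = "Good feedback mainly praises the learner."
bare = TrueFalseItem(statement, correct_answer=False)
rich = TrueFalseItem(
    statement,
    correct_answer=False,
    feedback_on_correct="Right: Shute advises against praise-oriented feedback.",
    feedback_on_wrong="Not quite: praise alone does not tell the learner what to improve.",
)

print(bare.respond(True))  # only the verdict
print(rich.respond(True))  # the verdict plus a reason
```

Both items grade the same way; only the second tells a learner who chose “true” why that answer is wrong.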

Perhaps the reason so few people use feedback on true/false questions is that the fields are buried? Or maybe it’s not always necessary for such basic question types.

Feedback in Multiple Choice Content Type

Multiple choice items in H5P offer a way to provide feedback for the “alternatives”, the choices. Remember that in the guide on multiple choice question construction we refer to the correct alternatives as the “answer” and the wrong ones as “distractors.”

Sometimes in H5P you find helpful feedback for correct answers, like this Chemistry multiple choice item

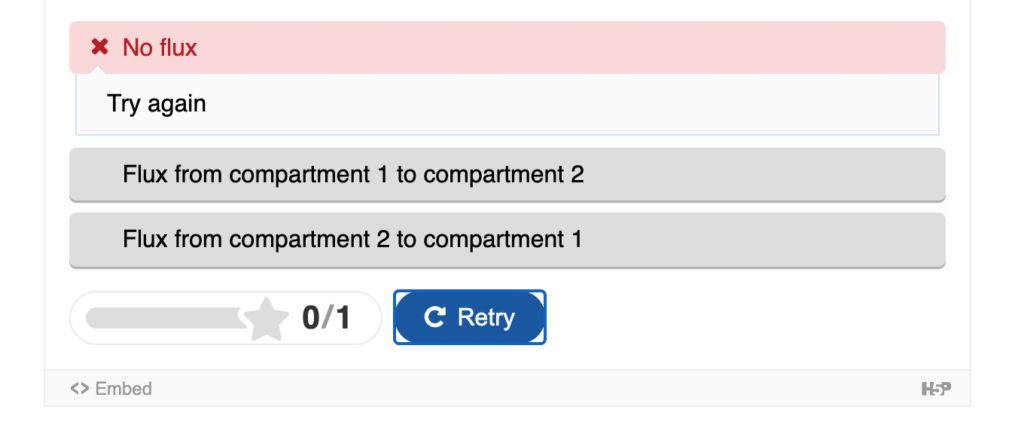

but they don’t provide helpful feedback for distractors- how does “try again” motivate anything beyond guessing at the other 2 answers?

Here is an example that has no feedback beyond the default correct (green check) and incorrect (red X). This is a problem where there is more than one correct answer.

Compare it to this version, which provides specific feedback on answers and distractors.

In addition, the blue text at the bottom provides a different kind of feedback depending on whether the learner got no correct answers, 1 correct answer, or 2 correct answers.

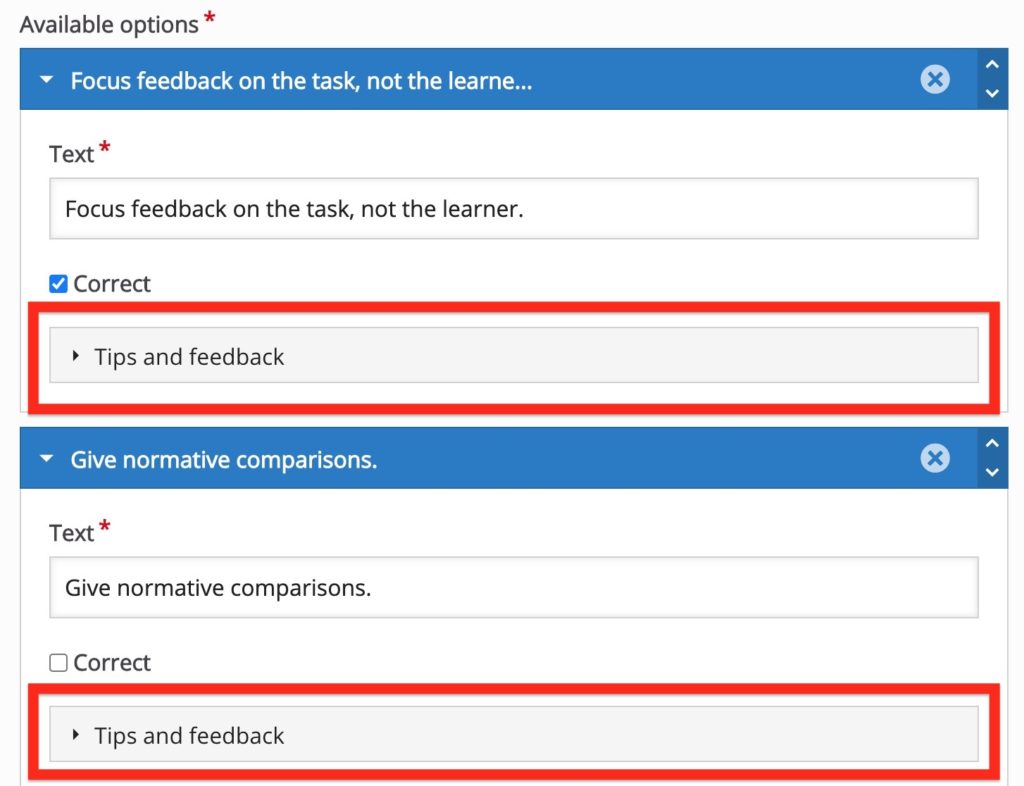

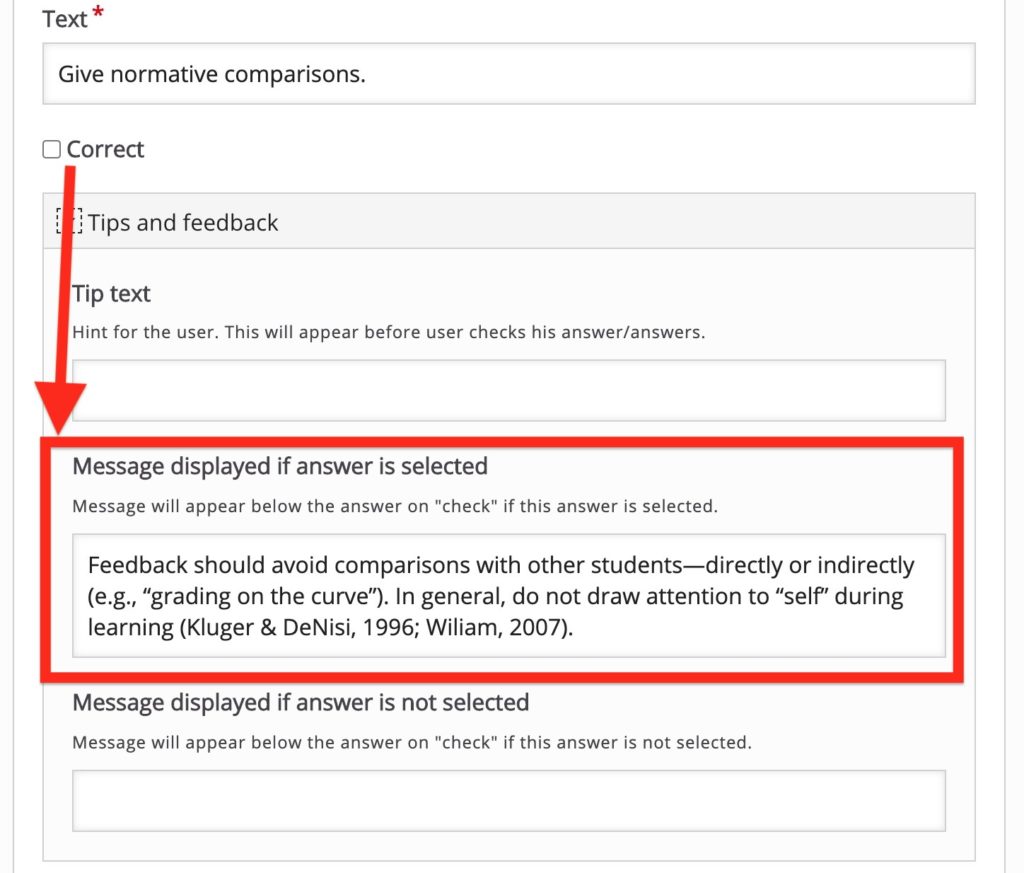

As with true/false, when creating a multiple choice item the H5P interface clearly shows how to mark the correct answer. But the feedback options are hidden inside the tabs labeled “Tips and feedback”.

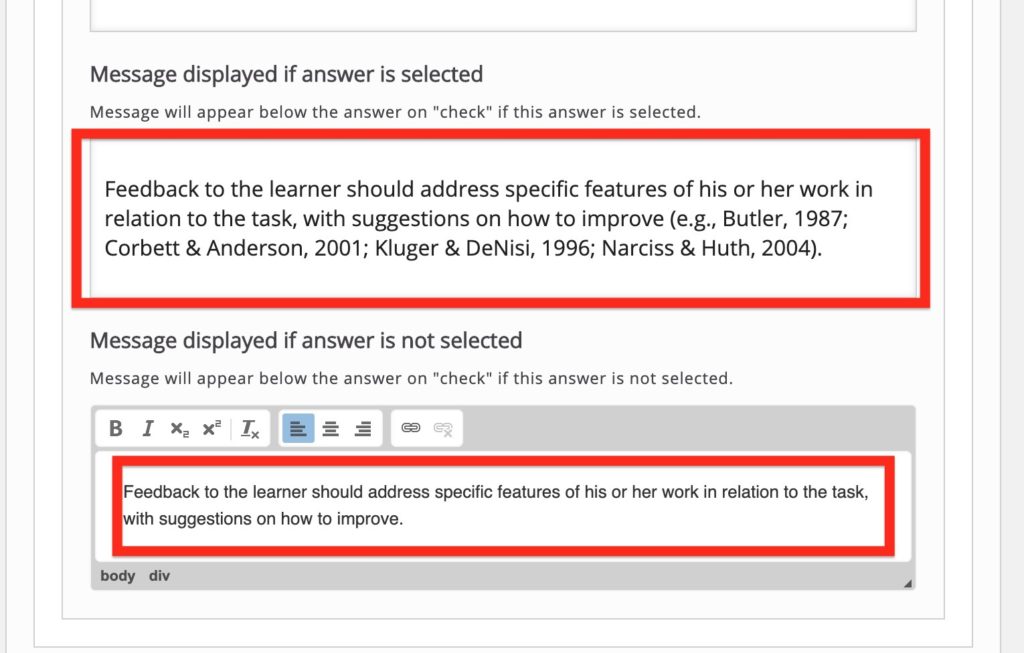

Examining these options shows how to construct feedback. One approach is to edit Tips and feedback only for the correct answer.

The first box holds the feedback shown if the answer (the one checked as correct) is chosen. The second box can provide feedback if a distractor is selected instead. This works in cases where the same feedback is appropriate for any wrong answer.

The other approach, used in the example above, is to leave that second box blank and provide specific feedback for each distractor (wrong answer), giving guidance tailored to each one.

In this case, the message displayed is for this specific distractor.

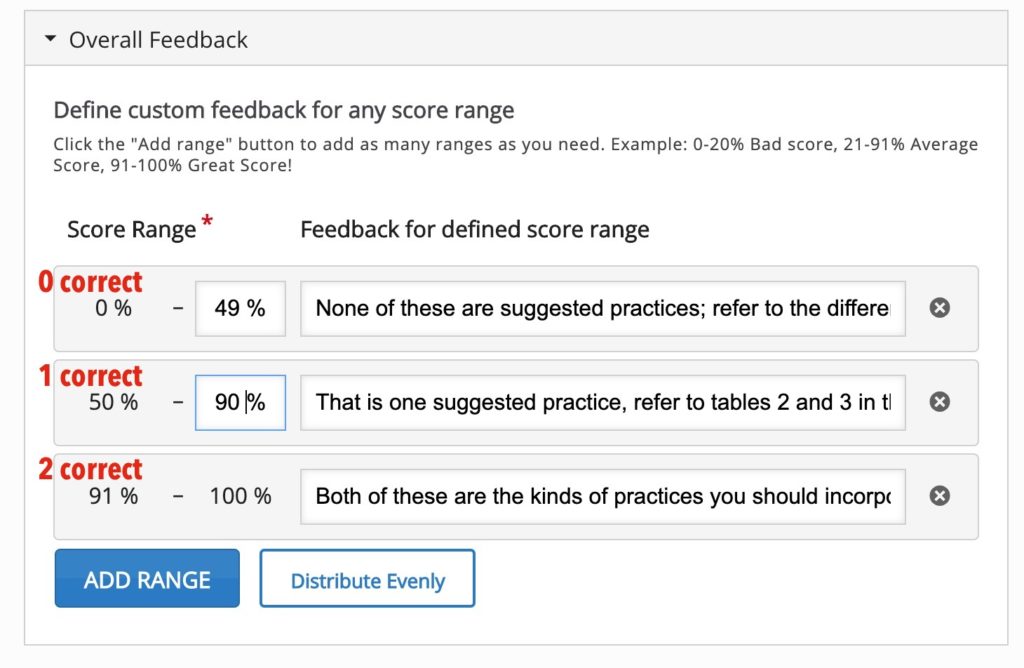
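The two approaches above can be compared in a small hypothetical model. Each alternative carries an optional message for when it is selected and one for when it is not; the names are illustrative assumptions, and H5P's exact display rules may differ. In the first approach both messages sit on the answer; in the second, the answer's second message is blank and each distractor gets its own “selected” message.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    text: str
    is_answer: bool
    if_selected: str = ""      # first box: shown when this alternative is chosen
    if_not_selected: str = ""  # second box: shown when this alternative is not chosen


def feedback_for(alternatives: list[Alternative], chosen: set[int]) -> list[str]:
    """Collect the non-empty messages triggered by the learner's choices.

    An empty result means only the default check mark or X would be shown.
    """
    messages = []
    for i, alt in enumerate(alternatives):
        text = alt.if_selected if i in chosen else alt.if_not_selected
        if text:
            messages.append(text)
    return messages


# Approach 1: one shared message for any wrong choice, stored on the answer.
one_box = [
    Alternative("Specific and task-focused", True,
                if_selected="Yes: it tells the learner what to fix.",
                if_not_selected="Look again: useful feedback is specific and about the task."),
    Alternative("Great work!", False),
    Alternative("Wrong, try again.", False),
]

# Approach 2: the answer's second box is blank; each distractor explains itself.
per_distractor = [
    Alternative("Specific and task-focused", True,
                if_selected="Yes: it tells the learner what to fix."),
    Alternative("Great work!", False,
                if_selected="Praise alone does not tell the learner what to improve."),
    Alternative("Wrong, try again.", False,
                if_selected="This invites guessing rather than explaining the error."),
]

print(feedback_for(one_box, {1}))
print(feedback_for(per_distractor, {2}))
```

The first approach needs only one message to write; the second costs a message per distractor but can address the specific misconception behind each wrong choice.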

Creating overall feedback is not always necessary; it may be useful only when there is more than one correct answer. In the example above, with 2 correct answers, the possible scores are 0, 1, or 2 out of 2. A little math converts these into the percentages used to define the ranges (0%, 50%, or 100%).

This way we can provide different feedback if the learner got both wrong, one wrong, or both right.

You might notice that the example messages provided under “Define custom feedback for any score range” are not really that useful: “Bad Score”?
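The range arithmetic for the two-answer example can be sketched in a few lines, with messages that say more than “Bad Score”. Assumptions: the raw score is converted to a whole-number percentage, and each range is inclusive at both ends; the ranges and messages are illustrative, not H5P's defaults.

```python
# Hypothetical score ranges (inclusive, in percent) for an item worth 2 points.
RANGES = [
    (0, 49, "Neither answer yet. Reread the definition of formative feedback and try again."),
    (50, 99, "One of two. Check the feedback on the option you missed."),
    (100, 100, "Both correct. You can spot the key features of useful feedback."),
]


def overall_feedback(score: int, max_score: int, ranges=RANGES) -> str:
    """Convert a raw score to a percentage and return the message for its range."""
    percent = round(100 * score / max_score)
    for low, high, message in ranges:
        if low <= percent <= high:
            return message
    raise ValueError(f"no range covers {percent}%")


for points in range(3):
    print(points, overall_feedback(points, 2))
```

With 2 points available, 0, 1, and 2 map to 0%, 50%, and 100%, so each outcome lands in exactly one range; leaving no gaps between ranges is what prevents a score from falling through without feedback.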

The multiple choice item offers many ways to provide feedback. Why is it not used more often?

Feedback in Drag Words Content Type

The drag words content type provides a more complex type of problem than multiple choice. Again, the default feedback is a simple right/wrong and a score.

That may be enough for practice. But in the H5P editor you can provide feedback for each right or wrong answer and, as above, an overall feedback summary based on the total score. Compare the example above with this version.

The feedback appears in a table below the activity, but it provides a way to target specific correct and incorrect answers.

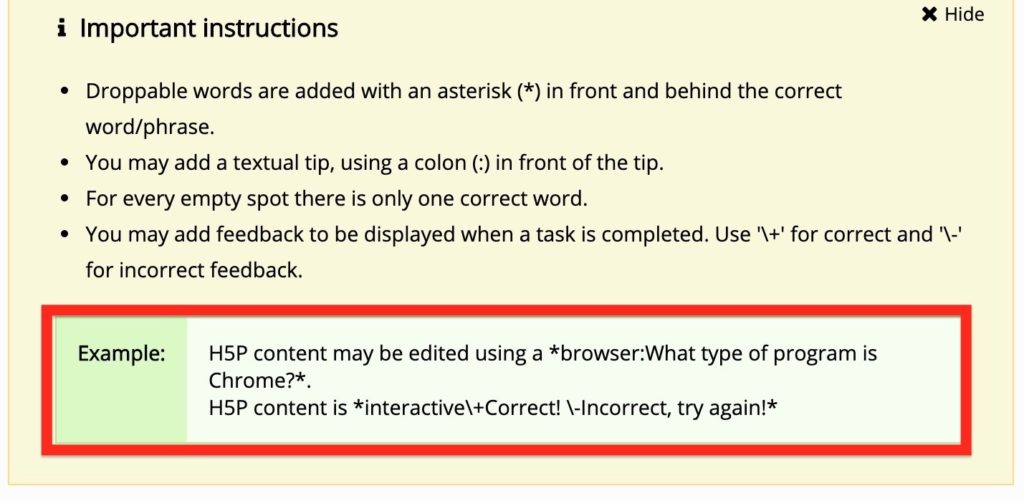

In the editor, the blanks are marked by putting asterisks around the correct answer. Most people stop there. The instructions explain how to add a hint that appears on screen as well as the formatting for feedback on correct and incorrect answers. Note that the example in the instructions provides feedback that is not much more useful than the default correct/incorrect.

The text area for the first example contains this text, which uses asterisks to define the correct dragged words but makes no use of hints or feedback:

In technology-assisted instruction, similar to classroom settings, formative feedback comprises *information* —whether a message, display, and so on— presented to the *learner* following his or her *input* (or on request, if applicable) with the purpose of shaping the perception, cognition, or action of the learner . The main goal of formative feedback— whether delivered by a *teacher* or computer, in the classroom or elsewhere— is to enhance *learning*, performance, or both, engendering the formation of accurate, targeted conceptualizations and *skills*.

The setup for the second example embeds feedback using the \+ and \- formatting:

In technology-assisted instruction, similar to classroom settings, formative feedback comprises *information\+Yes! feedback includes messages\-No, consider that there is content in feedback* —whether a message, display, and so on— presented to the *learner\+Yes it is for the person receiving instruction\-No. Who is this for?* following his or her *input* (or on request, if applicable) with the purpose of shaping the perception, cognition, or action of the learner . The main goal of formative feedback— whether delivered by a *teacher\+Yes, ideally the teacher is the provider of feedback or at least a director of it\-No. Who is the person who manages/decides on feedback?* or computer, in the classroom or elsewhere— is to enhance *learning\+Yes! This is the primary goal of course\-No, what is the thing we want to improve the most here?*, performance, or both, engendering the formation of accurate, targeted conceptualizations and *skills\+Yes, often we want the outcome to be things learners can understand but also do\-No, think of what things we want learners to be able to do*.

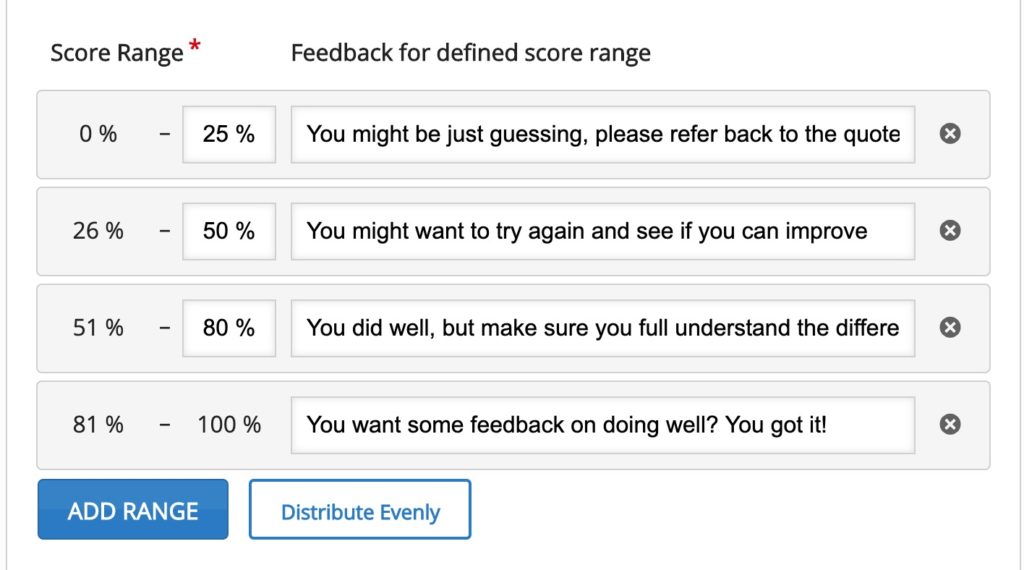
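To see how the markup in the second example breaks down, here is a small parser for the syntax as used above: asterisks mark a blank, \+ introduces the feedback for a correct drop, and \- the feedback for an incorrect one. It assumes the order answer, then \+, then \-, and it also splits off an optional hint, assumed here to follow a colon after the answer. This is a hypothetical helper for checking your own markup, not H5P's actual parser.

```python
import re
from dataclasses import dataclass


@dataclass
class Blank:
    answer: str
    tip: str = ""
    correct_feedback: str = ""
    incorrect_feedback: str = ""


# A blank is any run of non-asterisk characters wrapped in asterisks.
BLANK = re.compile(r"\*([^*]+)\*")


def parse_blank(inner: str) -> Blank:
    """Split one blank into answer, tip, and feedback (assumes the order answer, \\+, \\-)."""
    incorrect = correct = tip = ""
    if r"\-" in inner:
        inner, incorrect = inner.split(r"\-", 1)
    if r"\+" in inner:
        inner, correct = inner.split(r"\+", 1)
    if ":" in inner:  # assumed hint separator, only searched in the answer part
        inner, tip = inner.split(":", 1)
    return Blank(inner.strip(), tip.strip(), correct.strip(), incorrect.strip())


def parse_blanks(text: str) -> list[Blank]:
    """Return every blank found in a drag words text, in order."""
    return [parse_blank(m.group(1)) for m in BLANK.finditer(text)]


sample = (
    r"formative feedback comprises *information\+Yes! feedback includes messages"
    r"\-No, consider that there is content in feedback* presented to the *learner*"
)

for blank in parse_blanks(sample):
    print(blank)
```

Running it over a draft is a quick way to spot blanks that still have no feedback, like *learner* in this fragment of the first example.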

The overall summary feedback is optional, but since there is a wider range of possible scores in this question type, it often makes sense to provide some feedback on the overall score.

See Also

We discovered Connie Malamed’s suggestions for different kinds of feedback in Alternatives to Correct and Incorrect: Eight Approaches for Providing Learning Feedback (eLearning Coach). Several of these are worth considering for H5P activities in open textbooks.

- Provide Explanatory Feedback. If per-item feedback is possible, explain why a response is correct or incorrect. “You can apply explanatory feedback to any learning experience in which errors are caused by misconceptions or a lack of knowledge or skills. If your design has frequent opportunities for learners to respond, then you can catch and remediate misconceptions as the learner is constructing meaning.”

- Real World Consequences. Connect the item to, or incorporate it into, a case study or story of a situation. “For this type of learning, the ideal feedback replicates the consequences of the actions they choose. For example, in an emergency medicine training course, the choice of one drug results in stabilizing a patient whereas the choice of another drug results in dangerously high blood pressure levels. In a customer-service course, one response will satisfy a customer and another might leave a customer angry.”

- Offer Hints and Cues. Provide suggestions to move learners closer to the correct answer without giving it away. “the hint after a user chooses a specific incorrect response might be in the form of, ‘Have you considered all the factors in this situation?’ or ‘Is there another path you could take that would give better results?’ As you might expect, providing hints is most beneficial to learners who have lower prior knowledge.”

- Self-directed Feedback. This prompts learners to reflect on their own response. “For example, after requesting that learners write a short essay response to a question, provide an ideal response or specific criteria as feedback. Then let learners evaluate their own essay and compare it to the ideal.”

- Worked Examples as Feedback. Provide step-by-step instructions on how to solve a math problem or address a situation. “If the focus of your instruction is problem solving, you can provide worked-out examples for learners to study and then again as feedback after they solve a problem. Note that worked examples may not be effective for learners who are skilled at a task as it interferes with their ability to solve problems like an expert.”

One More Question

Image Credit: Pixabay image by bpcraddock